1. Project description

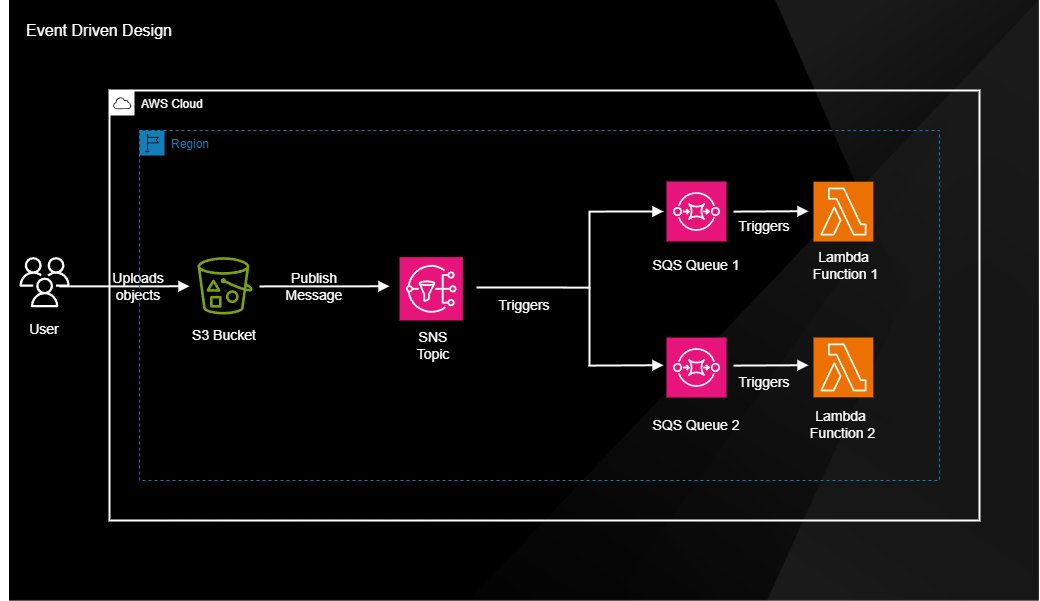

In this project I am creating an event-driven architecture. The event producer is an S3 bucket with event notifications enabled, event ingestion is handled by an SNS topic, the event stream consists of SQS queues, and the event consumers are Lambda functions. This architecture offers several advantages:

- Scalability: Event-driven designs can easily scale to accommodate changing workloads. As the number of events or the complexity of processing increases, you can add more event consumers (Lambda functions in this case) or scale up existing ones without significant reconfiguration.

- Loose Coupling: Event-driven architectures promote loose coupling between components. Each component interacts with the event stream, making it independent of the others. This independence allows for easier maintenance, updates, and replacements of individual components without affecting the entire system.

- Flexibility: Event-driven systems are flexible and adaptable. You can introduce new event producers or consumers without disrupting the existing architecture. This makes it easier to expand your system's capabilities as your requirements evolve.

- Fault Tolerance: Event-driven systems tolerate component failures well. If a consumer fails, its messages are not lost: they stay in the SQS queue (4 days by default, configurable up to 14 days) and become visible again for another attempt, so they can still be processed once the consumer recovers. This improves data reliability and system resilience.

- Real-time Processing: Event-driven architectures enable real-time or near-real-time processing of events as they occur. This is crucial for use cases where timely responses are essential, such as processing user interactions, monitoring, and alerting.

- Cost Efficiency: AWS services like Lambda, SNS, and SQS offer a pay-as-you-go pricing model, allowing you to optimize costs based on actual usage. You don't need to provision and maintain servers continuously, reducing infrastructure overhead.

- Event Traceability: Each event is a discrete message that can be followed through the system, which makes monitoring and troubleshooting easier. You can track the flow of events and analyze logs (for example, the CloudWatch logs of each Lambda function) to identify bottlenecks or failures.

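- Event Sourcing: Event-driven architectures can be used for event sourcing, where the stored events form a complete history of changes to a system's state. This is valuable for auditing, compliance, and rebuilding system state from events if necessary. Note that SQS deletes messages once they are processed, so in this setup event sourcing would additionally require persisting the events in a durable store.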

- Security and Isolation: With proper IAM (Identity and Access Management) configurations, you can enforce fine-grained access control and isolation between components. This enhances security and ensures that only authorized components can access and process events.
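To make the loose-coupling idea concrete, here is a small in-memory Python sketch of the same flow. `Topic`, `drain` and the plain `deque` queues are hypothetical stand-ins for SNS, the Lambda consumers and SQS; none of it talks to AWS. The producer only publishes to the topic, and each consumer only ever sees its own queue.

```python
from __future__ import annotations

from collections import deque
from typing import Callable


class Topic:
    """In-memory stand-in for the SNS topic: fans each message out to subscribed queues."""

    def __init__(self) -> None:
        self.subscriptions: list[tuple[deque, Callable[[dict], bool]]] = []

    def subscribe(self, queue: deque, accepts: Callable[[dict], bool]) -> None:
        self.subscriptions.append((queue, accepts))

    def publish(self, message: dict) -> None:
        for queue, accepts in self.subscriptions:
            if accepts(message):  # plays the role of a subscription filter policy
                queue.append(message)


def drain(queue: deque, consumer: Callable[[dict], str]) -> list[str]:
    """In-memory stand-in for a Lambda consumer polling its queue until it is empty."""
    results = []
    while queue:
        results.append(consumer(queue.popleft()))
    return results


topic = Topic()
queue1, queue2 = deque(), deque()  # stand-ins for sqsedd1 / sqsedd2
topic.subscribe(queue1, lambda m: m["eventName"] == "ObjectCreated:Put")
topic.subscribe(queue2, lambda m: m["eventName"] == "ObjectCreated:Copy")

# The producer (S3) only knows about the topic, not about queues or consumers.
topic.publish({"eventName": "ObjectCreated:Put", "key": "a.txt"})
topic.publish({"eventName": "ObjectCreated:Copy", "key": "b.txt"})

results1 = drain(queue1, lambda m: f"consumer1 processed {m['key']}")
results2 = drain(queue2, lambda m: f"consumer2 processed {m['key']}")
print(results1, results2)
```

Adding a third consumer would only mean one more `subscribe` call; the producer code would not change.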

1.1 Technologies:

- S3

- SNS

- SQS

- Lambda

2. Create S3 Bucket (Event producer)

- Bucket name: s3edd.

- Other settings: Default.

3. Create SNS topic (Event Ingestion)

The Lambda functions will need an IAM role with permissions for SQS and CloudWatch Logs to perform their operations; this role is attached in step 5.

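- Type: Standard (at-least-once delivery with best-effort ordering; a FIFO topic would add strict ordering and deduplication, which this project does not need).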

- Name: topicedd.

- Access policy: allows just 2 permissions: the first lets the S3 service publish messages to the topic, and the second lets our SQS queues subscribe to it. Account IDs are omitted from the ARNs below.

```json
{
  "Version": "2012-10-17",
  "Id": "__default_policy_ID",
  "Statement": [
    {
      "Sid": "__default_statement_ID",
      "Effect": "Allow",
      "Principal": { "Service": "s3.amazonaws.com" },
      "Action": "SNS:Publish",
      "Resource": "arn:aws:sns:eu-central-1::topicedd",
      "Condition": {
        "StringEquals": { "aws:SourceAccount": " " }
      }
    },
    {
      "Sid": "sqs_statement",
      "Effect": "Allow",
      "Principal": { "Service": "sqs.amazonaws.com" },
      "Action": "sns:Subscribe",
      "Resource": "arn:aws:sns:eu-central-1: :topicedd",
      "Condition": {
        "ArnEquals": {
          "aws:SourceArn": [
            "arn:aws:sqs:eu-central-1: :sqsedd1",
            "arn:aws:sqs:eu-central-1: :sqsedd2"
          ]
        }
      }
    }
  ]
}
```
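Because the account ID is left out of the ARNs in the topic policy, a small Python helper can fill it in consistently everywhere it appears. This is a sketch: `topic_policy` is a hypothetical helper, and `111122223333` is a placeholder account ID (the example ID used in AWS documentation), not a real one.

```python
import json


def topic_policy(region: str, account_id: str, topic: str, queues: list) -> dict:
    """Builds the two-statement SNS access policy with fully qualified ARNs."""
    topic_arn = f"arn:aws:sns:{region}:{account_id}:{topic}"
    return {
        "Version": "2012-10-17",
        "Id": "__default_policy_ID",
        "Statement": [
            {
                # S3 may publish, but only on behalf of buckets in our own account.
                "Sid": "__default_statement_ID",
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "SNS:Publish",
                "Resource": topic_arn,
                "Condition": {"StringEquals": {"aws:SourceAccount": account_id}},
            },
            {
                # Only the listed queues may subscribe.
                "Sid": "sqs_statement",
                "Effect": "Allow",
                "Principal": {"Service": "sqs.amazonaws.com"},
                "Action": "sns:Subscribe",
                "Resource": topic_arn,
                "Condition": {
                    "ArnEquals": {
                        "aws:SourceArn": [
                            f"arn:aws:sqs:{region}:{account_id}:{q}" for q in queues
                        ]
                    }
                },
            },
        ],
    }


# "111122223333" is a placeholder account ID; substitute your own.
policy = topic_policy("eu-central-1", "111122223333", "topicedd", ["sqsedd1", "sqsedd2"])
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the topic's access policy editor.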

4. Create SQS (Event Stream)

We're going to create two SQS queues.

- Type: Standard.

- Name: sqsedd1/sqsedd2

- Access policy: allows just 2 permissions: the first lets the Lambda function receive (and send) messages from the queue, and the second lets our SNS topic send messages to it. The policy below belongs to sqsedd1 (account IDs are omitted from the ARNs); sqsedd2 gets the same policy with its own queue and function names. Since the Lambda trigger reads the queue using the function's execution role from step 5, the first statement is not strictly required.

```json
{
  "Version": "2012-10-17",
  "Id": "__default_policy_ID",
  "Statement": [
    {
      "Sid": "AllowLambdaToSQS",
      "Effect": "Allow",
      "Principal": { "Service": "lambda.amazonaws.com" },
      "Action": [ "sqs:ReceiveMessage", "sqs:SendMessage" ],
      "Resource": "arn:aws:sqs:eu-central-1::sqsedd1",
      "Condition": {
        "ArnEquals": { "aws:SourceArn": "arn:aws:lambda:eu-central-1: :function:lambdaedd1" }
      }
    },
    {
      "Sid": "AllowSNSToSQS",
      "Effect": "Allow",
      "Principal": { "AWS": "*" },
      "Action": "sqs:SendMessage",
      "Resource": "arn:aws:sqs:eu-central-1: :sqsedd1",
      "Condition": {
        "ArnLike": { "aws:SourceArn": "arn:aws:sns:eu-central-1: :topicedd" }
      }
    }
  ]
}
```
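The queue policy is needed on both queues, with only the queue and function names changing. A sketch that generates both from one template, again with the placeholder account ID `111122223333` and hypothetical helper names (`arn`, `queue_policy`). It writes the Lambda ARN with the `function:` segment that Lambda function ARNs use.

```python
import json

REGION = "eu-central-1"
ACCOUNT_ID = "111122223333"  # placeholder (AWS documentation example ID); use your own


def arn(service: str, resource: str) -> str:
    """Builds an ARN of the form arn:aws:<service>:<region>:<account>:<resource>."""
    return f"arn:aws:{service}:{REGION}:{ACCOUNT_ID}:{resource}"


def queue_policy(n: int, topic: str = "topicedd") -> dict:
    """Access policy for sqsedd<n>, mirroring the two statements shown above."""
    queue_arn = arn("sqs", f"sqsedd{n}")
    return {
        "Version": "2012-10-17",
        "Id": "__default_policy_ID",
        "Statement": [
            {
                "Sid": "AllowLambdaToSQS",
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": ["sqs:ReceiveMessage", "sqs:SendMessage"],
                "Resource": queue_arn,
                # Lambda function ARNs include the "function:" segment.
                "Condition": {
                    "ArnEquals": {"aws:SourceArn": arn("lambda", f"function:lambdaedd{n}")}
                },
            },
            {
                # The SNS topic is allowed to deliver messages to this queue.
                "Sid": "AllowSNSToSQS",
                "Effect": "Allow",
                "Principal": {"AWS": "*"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                "Condition": {"ArnLike": {"aws:SourceArn": arn("sns", topic)}},
            },
        ],
    }


policies = {f"sqsedd{n}": queue_policy(n) for n in (1, 2)}
print(json.dumps(policies["sqsedd2"], indent=2))
```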

5. Create Lambda functions

Here we will create the two Lambda functions.

- Name: lambdaedd1/lambdaedd2.

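- Runtime: Python 3.7 (this runtime has since been deprecated on Lambda; a current Python 3 runtime can be used instead).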

- Execution role: Create a new role with basic Lambda permissions.

- Role policy: lambdaeddpolicy1. In the ARNs below, [1/2] stands for 1 or 2, depending on which function the policy belongs to, and account IDs are omitted.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "sqs:DeleteMessage",
        "logs:CreateLogStream",
        "sqs:ReceiveMessage",
        "sqs:GetQueueAttributes",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "arn:aws:sqs:eu-central-1::sqsedd[1/2]",
        "arn:aws:logs:eu-central-1: :log-group:/aws/lambda/lambdaedd[1/2]:*"
      ]
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": [
        "sqs:ReceiveMessage",
        "logs:CreateLogGroup"
      ],
      "Resource": [
        "arn:aws:logs:eu-central-1: :*",
        "arn:aws:sqs:eu-central-1: :sqsedd[1/2]"
      ]
    }
  ]
}
```

- Role policy for lambdaedd2: lambdaeddpolicy2, the same policy with the 2 variants of the queue and log group ARNs.
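For the function code, here is a minimal sketch of a handler both functions could share. It assumes SNS raw message delivery is left off (the default), so each SQS message body is an SNS envelope whose `Message` field contains the S3 notification as a JSON string. The name `lambda_handler` matches the console's default `lambda_function.lambda_handler` handler setting; the return value is only there to make local testing easier.

```python
import json
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Log every S3 object referenced by the SQS messages in this batch.

    Assumes SNS raw message delivery is OFF, so each SQS body is an SNS
    envelope whose "Message" field holds the S3 notification as a JSON string.
    """
    processed = []
    for sqs_record in event.get("Records", []):
        envelope = json.loads(sqs_record["body"])  # layer 1: SNS envelope
        s3_event = json.loads(envelope["Message"])  # layer 2: S3 notification
        # S3 sends an "s3:TestEvent" without "Records" when the notification
        # is created; .get() lets the handler skip it quietly.
        for s3_record in s3_event.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (for example, spaces become "+").
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            # print() output ends up in the function's CloudWatch log group.
            print(f"{s3_record['eventName']}: s3://{bucket}/{key}")
            processed.append(key)
    return {"processed": processed}
```

If the handler raises an exception, the messages in the batch return to the queue after the visibility timeout and are retried.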

6. Connect all 4 components

6.1 S3 bucket to SNS topic

On the S3 bucket, we create an event notification and select the SNS topic as its destination.

- Event name: s3eventnotification

- Object creation: All object create events.

- Destination: SNS topic - topicedd.
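The same notification can be expressed as the configuration document that the S3 API accepts. A sketch, assuming the placeholder account ID `111122223333`; `s3:ObjectCreated:*` is the event type behind the console's "All object create events" option. Applying it is left as a comment because it needs boto3 and AWS credentials, and `put_bucket_notification_configuration` replaces the bucket's entire notification configuration rather than adding to it.

```python
import json

REGION = "eu-central-1"
ACCOUNT_ID = "111122223333"  # placeholder account ID

notification_configuration = {
    "TopicConfigurations": [
        {
            "Id": "s3eventnotification",
            "TopicArn": f"arn:aws:sns:{REGION}:{ACCOUNT_ID}:topicedd",
            # Wildcard covering Put, Post, Copy and CompleteMultipartUpload.
            "Events": ["s3:ObjectCreated:*"],
        }
    ]
}

print(json.dumps(notification_configuration, indent=2))

# With boto3 installed and credentials configured, this could be applied with:
# boto3.client("s3").put_bucket_notification_configuration(
#     Bucket="s3edd", NotificationConfiguration=notification_configuration)
```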

6.2 SNS topic to SQS

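Create one subscription per SQS queue, each with a subscription filter policy: the policy for sqsedd1 only accepts ObjectCreated:Put events, and the policy for sqsedd2 only accepts ObjectCreated:Copy events. Because S3 puts the event name in the message body rather than in message attributes, the filter policy scope has to be set to message body (MessageBody).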

- Protocol: Amazon SQS.

- Endpoint: sqsedd1/sqsedd2.

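- Subscription filter policy (filter policy scope: message body). For sqsedd1:

```json
{ "Records": { "eventName": [ "ObjectCreated:Put" ] } }
```

For sqsedd2, use the same policy with ObjectCreated:Copy instead of ObjectCreated:Put.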
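To see why an upload reaches only sqsedd1, here is a deliberately simplified Python model of payload-based filtering. `matches` is a hypothetical helper that supports only exact string values and treats arrays in the message (such as S3's `Records` list) as "match if any element matches"; SNS itself supports more operators (prefix, numeric, exists, anything-but and others), so this is not its actual implementation.

```python
import json


def matches(policy: dict, body) -> bool:
    """Simplified model of SNS payload-based filtering (exact string values only).

    Every key in the policy must be present and match. Lists in the message
    body match if any element matches.
    """
    if isinstance(body, list):
        return any(matches(policy, item) for item in body)
    if not isinstance(body, dict):
        return False
    for key, expected in policy.items():
        if key not in body:
            return False
        value = body[key]
        if isinstance(expected, dict):
            # Nested policy object: recurse into the matching part of the body.
            if not matches(expected, value):
                return False
        else:
            # Leaf: the policy lists the allowed values for this key.
            values = value if isinstance(value, list) else [value]
            if not any(v in expected for v in values):
                return False
    return True


put_policy = {"Records": {"eventName": ["ObjectCreated:Put"]}}
copy_policy = {"Records": {"eventName": ["ObjectCreated:Copy"]}}

# Trimmed-down S3 notification body; real ones carry many more fields.
upload = json.loads(
    '{"Records": [{"eventName": "ObjectCreated:Put", "s3": {"object": {"key": "a.txt"}}}]}'
)

print(matches(put_policy, upload))   # True: delivered to sqsedd1
print(matches(copy_policy, upload))  # False: not delivered to sqsedd2
```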

6.3 SQS to Lambda

Finally, we add each SQS queue as a trigger for its Lambda function: sqsedd1 for lambdaedd1 and sqsedd2 for lambdaedd2.
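The trigger polls the queue with the function's execution role, so that role needs `sqs:ReceiveMessage`, `sqs:DeleteMessage` and `sqs:GetQueueAttributes` on the queue (the same SQS actions as AWS's managed `AWSLambdaSQSQueueExecutionRole` policy). A sketch that expands the `[1/2]` shorthand from step 5 into concrete policies and checks them; `role_policy` and `missing_trigger_actions` are hypothetical helpers, the account ID is a placeholder, and the check only does exact, case-sensitive ARN and action matching (no wildcards, no Deny statements).

```python
REGION = "eu-central-1"
ACCOUNT_ID = "111122223333"  # placeholder account ID

# SQS actions the Lambda trigger needs on its queue.
TRIGGER_ACTIONS = {"sqs:ReceiveMessage", "sqs:DeleteMessage", "sqs:GetQueueAttributes"}


def role_policy(n: int) -> dict:
    """Concrete version of the lambdaeddpolicy[1/2] shorthand for number n."""
    queue_arn = f"arn:aws:sqs:{REGION}:{ACCOUNT_ID}:sqsedd{n}"
    log_group_arn = f"arn:aws:logs:{REGION}:{ACCOUNT_ID}:log-group:/aws/lambda/lambdaedd{n}:*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Sid": "VisualEditor0", "Effect": "Allow",
             "Action": ["sqs:DeleteMessage", "logs:CreateLogStream", "sqs:ReceiveMessage",
                        "sqs:GetQueueAttributes", "logs:PutLogEvents"],
             "Resource": [queue_arn, log_group_arn]},
            {"Sid": "VisualEditor1", "Effect": "Allow",
             "Action": ["sqs:ReceiveMessage", "logs:CreateLogGroup"],
             "Resource": [f"arn:aws:logs:{REGION}:{ACCOUNT_ID}:*", queue_arn]},
        ],
    }


def missing_trigger_actions(policy: dict, queue_arn: str) -> set:
    """Trigger actions that no Allow statement grants on exactly queue_arn."""
    granted = set()
    for statement in policy["Statement"]:
        actions = statement["Action"]
        actions = [actions] if isinstance(actions, str) else actions
        resources = statement["Resource"]
        resources = [resources] if isinstance(resources, str) else resources
        if statement["Effect"] == "Allow" and queue_arn in resources:
            granted.update(actions)
    return TRIGGER_ACTIONS - granted


for n in (1, 2):
    queue = f"arn:aws:sqs:{REGION}:{ACCOUNT_ID}:sqsedd{n}"
    missing = missing_trigger_actions(role_policy(n), queue)
    print(f"lambdaedd{n}:", missing or "all trigger permissions present")
```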

7. Testing the setup

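To test our setup, we upload a file to the S3 bucket and check the CloudWatch logs of the Lambda functions. Uploading a small file produces an ObjectCreated:Put event, which should appear in the logs of lambdaedd1; copying an object within S3 produces ObjectCreated:Copy, which should reach lambdaedd2. Large files uploaded with multipart upload produce ObjectCreated:CompleteMultipartUpload instead, which neither filter policy accepts.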
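Before uploading real files, the functions can also be exercised from the Lambda console with a test event. This hypothetical `make_test_event` helper builds a trimmed-down event with the same three layers a real invocation has (SQS record, SNS envelope, S3 notification); real events carry many more fields, so treat it as a sketch for quick checks rather than an exact copy.

```python
import json


def make_test_event(bucket: str, key: str, event_name: str = "ObjectCreated:Put") -> dict:
    """Hypothetical helper: SQS event -> SNS envelope -> S3 notification, minimal fields only."""
    s3_notification = {
        "Records": [{
            "eventSource": "aws:s3",
            "eventName": event_name,
            "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
        }]
    }
    # SNS wraps the S3 notification as a JSON string in "Message" ...
    sns_envelope = {"Type": "Notification", "Message": json.dumps(s3_notification)}
    # ... and SQS carries the whole envelope as a JSON string in "body".
    return {"Records": [{"eventSource": "aws:sqs", "body": json.dumps(sns_envelope)}]}


# Paste the printed JSON into a console test event for lambdaedd1; use
# event_name="ObjectCreated:Copy" for lambdaedd2.
print(json.dumps(make_test_event("s3edd", "test.txt"), indent=2))
```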